Of course a “record” depends on fields and content, and there is system overhead and such, so any number would be “in a ballpark”. But just for an estimate that is easy to scale up: if a database only had a datasheet and one text field with ten-character content (change the numbers if necessary for easier math), what would be a practical (meaning not right at the edge of possibility) number of records that database could hold, given the Mac has 8GB or 16GB RAM? I am running Ventura (macOS 13).

A quick experiment showed a new database with one empty record was 6,638 bytes and 6,648 bytes with 10 characters entered into that one field of the single record. Adding a second record with the same 10 characters entered into that field was 6,671 bytes or an additional 23 bytes for the new record. You do the math…
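For anyone who wants to repeat that measurement on their own files, here is a minimal Python sketch of the arithmetic. The function name is made up for illustration, and the only inputs are the three file sizes quoted above; it says nothing about how Panorama lays out a record internally.

```python
def marginal_bytes_per_record(size_before: int, size_after: int,
                              records_added: int = 1) -> float:
    """Growth in file size per added record (hypothetical helper)."""
    return (size_after - size_before) / records_added


# File sizes reported in the experiment above, in bytes.
EMPTY_RECORD = 6_638      # one empty record
ONE_RECORD = 6_648        # one record holding 10 characters
TWO_RECORDS = 6_671       # two records holding 10 characters each

# Typing 10 characters into the existing record grew the file by 10 bytes.
print(ONE_RECORD - EMPTY_RECORD)

# Adding a second 10-character record grew it by 23 bytes.
print(marginal_bytes_per_record(ONE_RECORD, TWO_RECORDS))
```

Measuring across a larger batch of added records (a bigger `records_added`) would average out any fixed overhead in the file.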

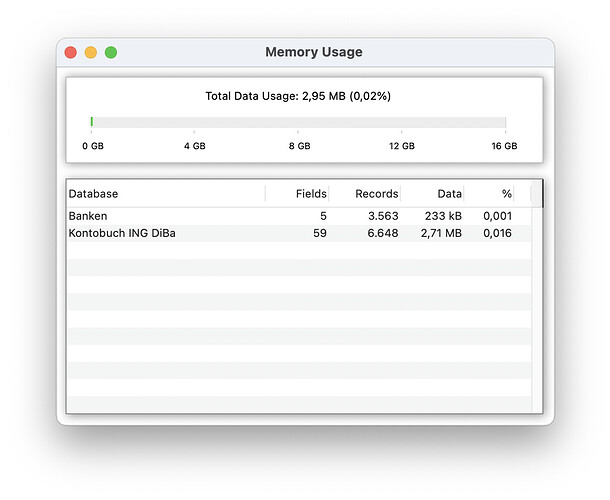

You can easily measure it. I suppose you have similar databases. Open some of your databases and have a look in the menu Panorama X > Memory Usage…. Here is an example screenshot.

There are seven bytes of overhead per record, and one byte per cell (these can be slightly higher if the data is larger, but for typical data this works). So that would be 18 bytes per record in your example (7 + 1 + 10). At that rate you could get 59.6 million records in a gigabyte. So I’d think a quarter billion to half billion records would be doable in 8-16 GB.
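The estimate above can be sketched in a few lines of Python so the numbers are easy to change. The 7-byte and 1-byte figures come from the post; everything else is an assumption made for illustration: one byte per character, a gigabyte taken as 2^30 bytes, and a `usable_fraction` of 0.5 standing in for "practical, not right at the edge".

```python
RECORD_OVERHEAD = 7   # bytes per record, as stated above
CELL_OVERHEAD = 1     # bytes per cell, as stated above
GIB = 2**30           # assumption: "a gigabyte" means 2^30 bytes


def bytes_per_record(chars_per_field: int, fields: int = 1) -> int:
    """Approximate in-memory size of one record (assumes 1 byte per character)."""
    return RECORD_OVERHEAD + fields * (CELL_OVERHEAD + chars_per_field)


def records_that_fit(ram_gib: float, chars_per_field: int,
                     fields: int = 1, usable_fraction: float = 0.5) -> int:
    """Records that fit if only `usable_fraction` of RAM goes to the data.

    usable_fraction is an assumed headroom factor, not a Panorama setting.
    """
    budget = int(ram_gib * GIB * usable_fraction)
    return budget // bytes_per_record(chars_per_field, fields)


print(bytes_per_record(10))              # 18
print(GIB // bytes_per_record(10))       # 59652323, i.e. about 59.6 million
for ram in (8, 16):
    print(ram, "GB:", f"{records_that_fit(ram, 10):,}")
```

With half the RAM reserved for everything else, this lands at roughly a quarter billion records for 8 GB and half a billion for 16 GB, which matches the range given above.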

Of course ten characters is pretty small for most data sets. If your data averaged 100 characters per record, that would be in the range of 25-50 million records.

I do know of one user who was dealing with databases over 60GB. This was on a Mac Pro with 1.5TB of memory.

My txt file of one field, 10 characters - base10 permutations - was 40MB. It had 3,628,800 records and seemed to take less than 1% of available RAM (Mac mini, Ventura, 8GB).

I tried my Base11 permutations txt file - 480MB, 39,916,800 records. The beachball was spinning for a while, at first over the desktop, but when I moved the mouse it disappeared. Because it was taking a while and I no longer saw the beachball, I went to Force Quit, which said Panorama was not responding. But I was sure I had enough RAM; just not enough patience.

So I tried again because I wanted to see whether just moving the mouse made the beachball disappear. It did, but hovering over the Panorama menu brought it back. Note that at the beginning, when I selected the txt file, Panorama correctly displayed partial content in the Open File dialog window.

It finished reading all the records just fine and is using about 10.6% of my RAM.

Initially, I thought it was faster to write out the file of permutations ahead of time - a one-time event - and then read them in as needed. But that thinking is from an era when CPU speeds were listed in the single-digit MHz range or less. Now I know I can run an algorithm that will generate all those permutations on the fly - in code - in a few seconds; much faster than it takes to read the file.

If I ever have to deal with base15 permutations (15! = 1,307,674,368,000), it’s good to know they can be generated on the fly within a practical time.
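As an illustration of generating permutations on the fly instead of reading them from a file, here is a minimal Python sketch using the standard library's `itertools.permutations`. It is not the algorithm or language used above, which isn't shown; the symbol set (`0`-`9` followed by `A`-`E` for bases above 10) is an assumption, and no timing is claimed.

```python
from itertools import islice, permutations
from math import factorial

# Assumed symbol set: digits 0-9, then letters for bases above 10.
DIGITS = "0123456789ABCDE"


def digit_permutations(base: int):
    """Lazily yield every permutation of the first `base` symbols as a string.

    Nothing is stored: each permutation is produced only when requested,
    so memory use stays small no matter how many there are in total.
    """
    for perm in permutations(DIGITS[:base]):
        yield "".join(perm)


# Record counts are just factorials, so they can be checked without generating anything.
for base in (10, 11, 15):
    print(base, f"{factorial(base):,}")

# Peek at the start of the base-11 sequence without building the whole list.
for record in islice(digit_permutations(11), 3):
    print(record)
```

Because the generator is lazy, a consumer can stop early or process records one at a time, and only the count (not memory) grows with the base.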

The txt file was 479MB and the saved Panorama file was 918MB.

So that’s 9 extra bytes per record; I guess I was off by one.

For your very short records, the overhead makes the data almost twice as big as a text file. If the records were longer, the percentage of overhead would be much less.

Maybe. Keep in mind, however, that importing a text file takes a LOT longer than opening a database that has already been imported and saved in Panorama format. I think even your 3.6 million record database will open pretty quickly once you have saved it as a database.